Authors: Vivek Nair, Gonzalo Munilla-Garrido, Dawn Song

Introduction

Imagine diving into the vibrant world of virtual reality, where the lines between the digital and physical realms blur, and you’re free to explore endless possibilities. The metaverse promises to revolutionize how we interact with technology, making the immersive experiences of VR the next big leap in human-computer interaction. However, with great innovation comes great responsibility, and the burgeoning virtual landscape is no exception. Recent studies have revealed a troubling reality: VR users can be easily profiled and deanonymized, putting your privacy at significant risk.

If you think the internet has its privacy pitfalls, the metaverse might make you rethink your digital safety altogether. While we’re used to protective measures on the web, such as incognito browsing, these defenses are conspicuously absent in the VR domain—until now.

The paper presents the first-ever “incognito mode” for VR, a solution designed to safeguard sensitive information. The technique uses local 𝜺-differential privacy. This is a formal framework in which noise is added to each user’s data on their own device before anyone else sees it, and the parameter 𝜺 tunes the balance between privacy and usability. In simple terms, the authors’ approach injects carefully calibrated noise into the data. This makes it significantly harder for anyone to pinpoint personal details while keeping the immersive experience intact.
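For reference, the block below gives the textbook definition of local 𝜺-differential privacy. It also shows the Laplace mechanism commonly used to achieve it for a numerical attribute with public bounds [l, u]. This is standard notation rather than the paper’s own, and it is an assumption that MetaGuard’s noise function takes exactly this form.

```latex
\Pr[\mathcal{M}(x) \in S] \le e^{\varepsilon}\, \Pr[\mathcal{M}(x') \in S]
\quad \text{for all } x, x' \in [l, u] \text{ and all output sets } S,
\qquad
\mathcal{M}(x) = x + \mathrm{Lap}\!\left(\tfrac{u - l}{\varepsilon}\right)
```

The noise scale is (u − l)/𝜺. A smaller 𝜺 therefore means more noise and stronger privacy, and a larger 𝜺 means less noise and better accuracy.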

The authors developed this privacy shield as a universal Unity (C#) plugin and evaluated it in popular VR applications. The result? Attackers’ ability to infer the protected attributes drops markedly when the defense is active.

The sensitive user attributes the authors aim to protect include:

- Anthropometrics: height, wingspan, arm lengths, interpupillary distance (IPD), handedness, reaction time.

- Environment: room size

The Technique

Additive Offset

The additive offset protects attributes that remain constant throughout a game session, such as IPD and voice pitch.

IPD doesn’t change during a session, which makes it straightforward to defend. Scaling the avatar’s size makes the apparent distance between its eyes reflect a differentially private value, which prevents accurate profiling based on IPD.

Voice pitch is another static attribute that can be offset. Shifting the pitch by a differentially private amount makes it harder for attackers to identify users by their voice. It also confuses machine learning models that try to infer gender or ethnicity from it.

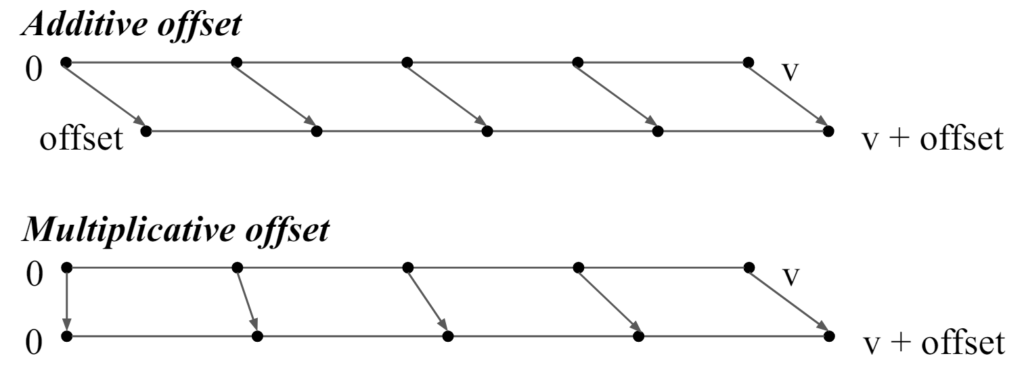

Figure 1: Local differential privacy for continuous numerical attributes with additive offset
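The additive-offset idea for static attributes can be sketched in Python. The sketch makes several assumptions. It uses a plain Laplace mechanism: the true value is clamped to public bounds, Laplace noise with scale (hi − lo)/𝜺 is added, and the result is clamped again. The second clamp is post-processing, so it does not weaken the guarantee. It also assumes the offset is drawn once per session. The names `ldp_noisy_offset` and `StaticAttributeGuard` are hypothetical Python stand-ins, not the paper’s C# code, and the IPD and pitch bounds are illustrative only.

```python
import random


def laplace(scale: float, rng: random.Random) -> float:
    # Laplace(0, scale) is the difference of two independent Exp(1) draws.
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def ldp_noisy_offset(value: float, lo: float, hi: float,
                     epsilon: float, rng: random.Random) -> float:
    # Assumed mechanism: clamp to public bounds, add Laplace noise with
    # sensitivity (hi - lo), then clamp again (post-processing keeps epsilon-DP).
    clamped = min(max(value, lo), hi)
    noisy = clamped + laplace((hi - lo) / epsilon, rng)
    noisy = min(max(noisy, lo), hi)
    return noisy - value  # offset mapping the true value to the noisy one


class StaticAttributeGuard:
    """Hypothetical helper: one additive offset per session for a static attribute."""

    def __init__(self, true_value: float, lo: float, hi: float,
                 epsilon: float, rng: random.Random) -> None:
        # Sampled once: fresh noise every frame could be averaged away.
        self.true_value = true_value
        self.offset = ldp_noisy_offset(true_value, lo, hi, epsilon, rng)

    def apply(self, reading: float) -> float:
        return reading + self.offset


rng = random.Random(7)
# Illustrative bounds: IPD in metres, voice pitch in Hz.
ipd = StaticAttributeGuard(true_value=0.063, lo=0.055, hi=0.075, epsilon=1.0, rng=rng)
# Scaling the avatar by noisy/true makes its eye distance equal the noisy IPD.
avatar_scale = ipd.apply(ipd.true_value) / ipd.true_value
pitch = StaticAttributeGuard(true_value=120.0, lo=85.0, hi=255.0, epsilon=1.0, rng=rng)
print(f"avatar scale {avatar_scale:.3f}, pitch shift {pitch.offset:+.1f} Hz")
```

Because the offset is fixed for the session, every observation is shifted by the same amount. An observer therefore cannot average repeated readings to recover the true value.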

Multiplicative Offset

We now turn to attributes that require a multiplicative offset. Take height: the measured height should drop to zero when the user lies down in a game session. With an additive offset, it would instead read zero + offset, revealing the offset directly to an attacker. A multiplicative offset prevents this.

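Similarly, the distance between a user’s hands should read as zero when they touch but should include an offset when the arms are fully extended. An additive offset cannot satisfy both. Instead, we scale the readings by the ratio 𝑣′/𝑣, where 𝑣 is the user’s true value of the attribute (e.g., their true wingspan) and 𝑣′ is its differentially private replacement. This gives the attribute a differentially private value at its extreme while preserving the zero point (hands touching, or the user lying on the ground).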

Figure 2: Additive vs. multiplicative offset transformations.

The multiplicative offset protects height, wingspan, arm length ratio, and room size. The algorithm, shown in Figure 3, is fairly straightforward.

Figure 3: Local differential privacy for continuous attributes with multiplicative offsets.
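The ratio-based scaling can be sketched in Python under the same assumed clamped Laplace mechanism. The noisy extreme 𝑣′ is drawn once per session, and live readings are multiplied by 𝑣′/𝑣. `MultiplicativeGuard` and the height bounds are hypothetical and only illustrate the idea.

```python
import random


def laplace(scale: float, rng: random.Random) -> float:
    # Laplace(0, scale) as the difference of two Exp(1) draws.
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def ldp_noisy_value(value: float, lo: float, hi: float,
                    epsilon: float, rng: random.Random) -> float:
    # Assumed mechanism: clamp, add Laplace((hi - lo) / epsilon), clamp again.
    clamped = min(max(value, lo), hi)
    return min(max(clamped + laplace((hi - lo) / epsilon, rng), lo), hi)


class MultiplicativeGuard:
    """Hypothetical helper: scale live readings by v'/v, fixed per session."""

    def __init__(self, true_extreme: float, lo: float, hi: float,
                 epsilon: float, rng: random.Random) -> None:
        # v = the user's true extreme value (e.g. standing height), assumed > 0;
        # v' = its differentially private replacement.
        noisy_extreme = ldp_noisy_value(true_extreme, lo, hi, epsilon, rng)
        self.ratio = noisy_extreme / true_extreme

    def apply(self, reading: float) -> float:
        # 0 stays 0 (lying down, hands touching); the true extreme maps to v'.
        # An additive offset would instead report reading + offset, leaking
        # the offset itself whenever the reading is 0.
        return reading * self.ratio


rng = random.Random(42)
height = MultiplicativeGuard(true_extreme=1.80, lo=1.50, hi=2.00, epsilon=1.0, rng=rng)
print(height.apply(0.0))   # → 0.0 (lying on the floor reveals nothing)
print(height.apply(1.80))  # standing: the noisy height v'
```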

If it is hard to see how 𝜺-differential privacy works, or how the paper computes LDPNoisyOffset(), here is the relevant code from the paper.

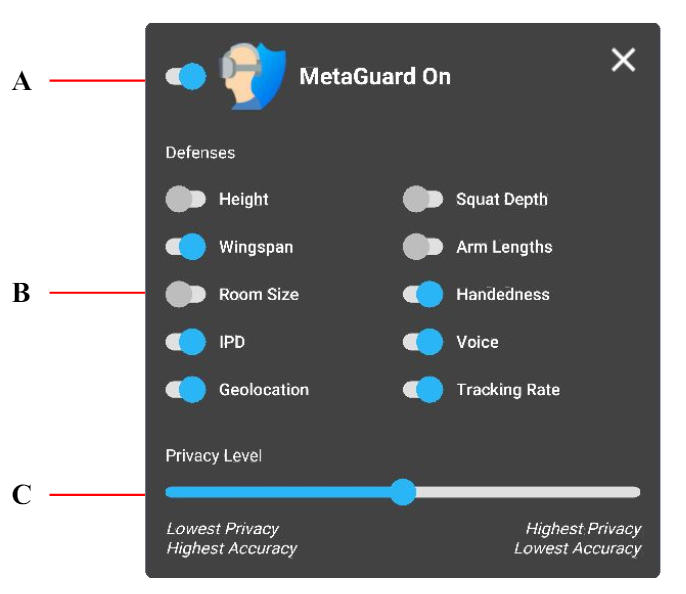
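To see what 𝜺 actually guarantees, the following Python sketch assumes a plain Laplace mechanism with scale (hi − lo)/𝜺, which is not necessarily the paper’s exact noise function. It checks that the mechanism never lets an observer tell two users apart by more than a factor of e^𝜺. The sketch compares the hardest pair of users, the two most distant values the bounds allow. The helper names and the height bounds are hypothetical.

```python
import math


def laplace_pdf(y: float, mu: float, scale: float) -> float:
    """Density of Laplace(mu, scale) at y."""
    return math.exp(-abs(y - mu) / scale) / (2.0 * scale)


def worst_case_ratio(lo: float, hi: float, epsilon: float, ys) -> float:
    """Largest density ratio between noisy outputs for true values lo and hi.

    lo and hi are the two most distant inputs the public bounds allow,
    so they are the hardest pair for the mechanism to hide.
    """
    scale = (hi - lo) / epsilon  # sensitivity / epsilon
    return max(laplace_pdf(y, lo, scale) / laplace_pdf(y, hi, scale) for y in ys)


# Hypothetical height bounds in metres and a grid of possible noisy outputs.
lo, hi = 1.5, 2.0
ys = [0.5 + i * 0.01 for i in range(251)]  # 0.5 m to 3.0 m
for eps in (0.5, 1.0, 3.0):
    # The worst-case ratio should equal e^eps: smaller eps, closer to 1.
    print(eps, round(worst_case_ratio(lo, hi, eps, ys), 4), round(math.exp(eps), 4))
```

For any output below `lo`, the ratio is exactly e^𝜺, and everywhere else it is smaller. Lowering 𝜺 therefore pushes the two users’ output distributions closer together.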

MetaGuard is the authors’ practical implementation of these defenses, offering the first “incognito mode” for the metaverse. It is an open-source Unity (C#) plugin and can be integrated into Unity-based VR applications using MelonLoader, a mod loader for Unity games. Here’s an overview:

Key Features:

- Master Toggle: A single switch to activate incognito mode with default settings.

- Feature Toggles: Enable or disable individual defenses as needed. For example, in Beat Saber, users might disable wingspan and arm length defenses.

- Privacy Slider: Adjust the privacy level (𝜀) to trade privacy against accuracy. A smaller 𝜀 adds more noise and gives stronger privacy.

Privacy Settings:

- High Privacy: For virtual telepresence applications like VRChat.

- Balanced: For casual gaming requiring some dexterity, like virtual board games.

- High Accuracy: For competitive games that are sensitive to noise, like Beat Saber.

Figure 4: VR user interface of MetaGuard plugin
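The master toggle, feature toggles, and privacy presets can be sketched as a small settings object. Everything in it is hypothetical: the class name, the feature keys, and especially the 𝜀 value assigned to each preset, since MetaGuard’s actual slider values are not given here. The noise estimate assumes Laplace noise before any clamping, for which the mean absolute noise equals the scale (hi − lo)/𝜀.

```python
from dataclasses import dataclass, field

# Hypothetical epsilon per preset; MetaGuard's real values may differ.
PRESET_EPSILON = {"high_privacy": 0.5, "balanced": 1.0, "high_accuracy": 3.0}


@dataclass
class GuardSettings:
    """Hypothetical settings object mirroring the UI described above."""
    master_enabled: bool = True
    preset: str = "balanced"
    features: dict = field(default_factory=lambda: {
        "height": True, "wingspan": True, "arm_length": True,
        "room_size": True, "ipd": True, "voice_pitch": True})

    @property
    def epsilon(self) -> float:
        return PRESET_EPSILON[self.preset]

    def active_features(self) -> list:
        # The master toggle overrides the per-feature switches.
        if not self.master_enabled:
            return []
        return [name for name, on in self.features.items() if on]

    def expected_abs_noise(self, lo: float, hi: float) -> float:
        # For Laplace(0, b) noise, E|noise| = b = (hi - lo) / epsilon.
        return (hi - lo) / self.epsilon


# Beat Saber-style setup: accuracy first, arm-related defenses off.
beat_saber = GuardSettings(preset="high_accuracy")
beat_saber.features["wingspan"] = False
beat_saber.features["arm_length"] = False
print(beat_saber.active_features())  # → ['height', 'room_size', 'ipd', 'voice_pitch']
print(GuardSettings(preset="high_privacy").expected_abs_noise(1.5, 2.0))  # → 1.0
print(GuardSettings().expected_abs_noise(1.5, 2.0))  # → 0.5
```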