This is a common practice today that companies are outsourcing their ML tasks to the public clouds. These tasks sometimes involve sensitive data (health and finance, for example) and output. Now think of a cloud party which might be a malicious one to sell the sensitive data to other companies. Alongside there might be malware present in the cloud to tamper the data or steal it.

The most pragmatic solution to this problem is to introduce a special processor in the cloud that is totally isolated. In other words, all the computations and data present inside that processor are safe from the other parties in the cloud. A popular example of this processor is the Intel SGX. This ensures:

1. Integrity: cloud can not tamper the computations

2. Privacy: cloud does not learn about inputs and outputs

3. Model Privacy: cloud does not learn about the model

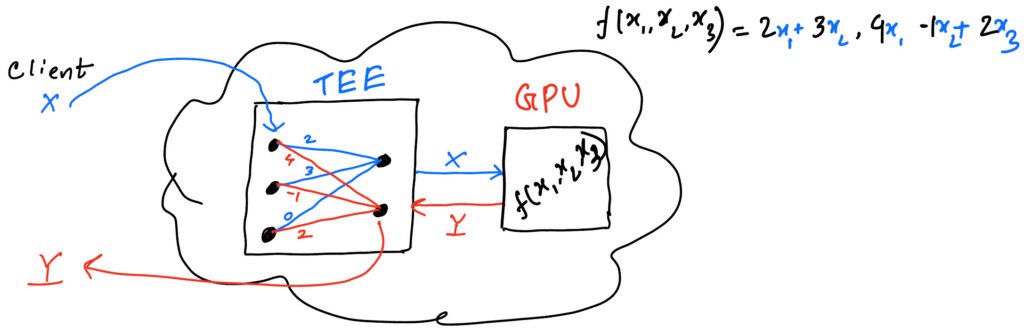

The problem with this isolated CPU is that it does not have any access to the GPU or TPU, which is an important aspect for ML tasks as this makes the training and inference faster. On the other hand, the hardware with GPU/TPU access in the cloud is not a safe place as it is not isolated. This paper comes in to fill the gap. This paper ensures

1. integrity and privacy properties by hardware isolation

2. incorporating faster hardware GPU (which is not trusted) by outsourcing from isolated hardware using some cryptographic primitives(still maintaining integrity and privacy of the computation).

3. compromising model privacy to make the computation faster still maintaining the integrity and privacy of the computation

Enough talks. Now let’s illustrate the idea…

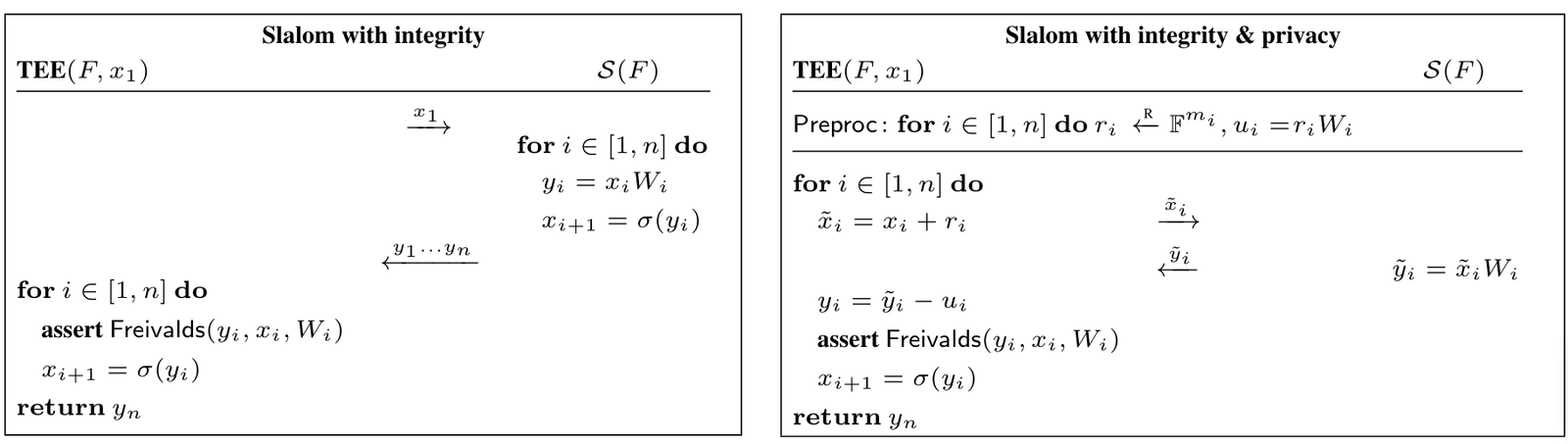

Suppose this is a trained model which is in the cloud. The linear computation f(x) will be outsourced to the GPU to make the heavy computation faster. The isolated hardware or the Trusted Execution Environment (TEE) does the following things

1. Encrypt X to X’ (Privacy)

2. Decrypt Y’ to Y (Privacy)

3. Check whether Y is correct output after multiplication of X and W (Integrity)

4. Perform non-linear operations to generate X for next layer

NB: As the model’s privacy is compromised, the model weight (W) is known to both the TEE and the cloud server.

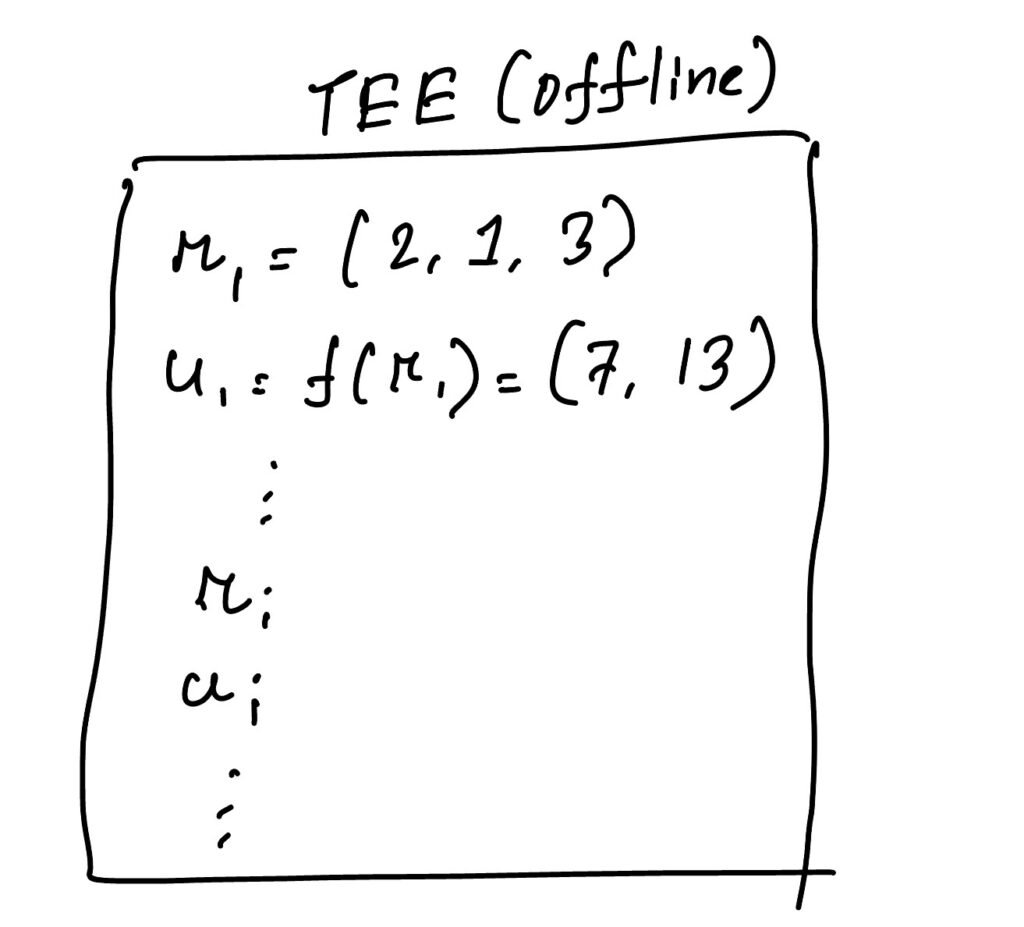

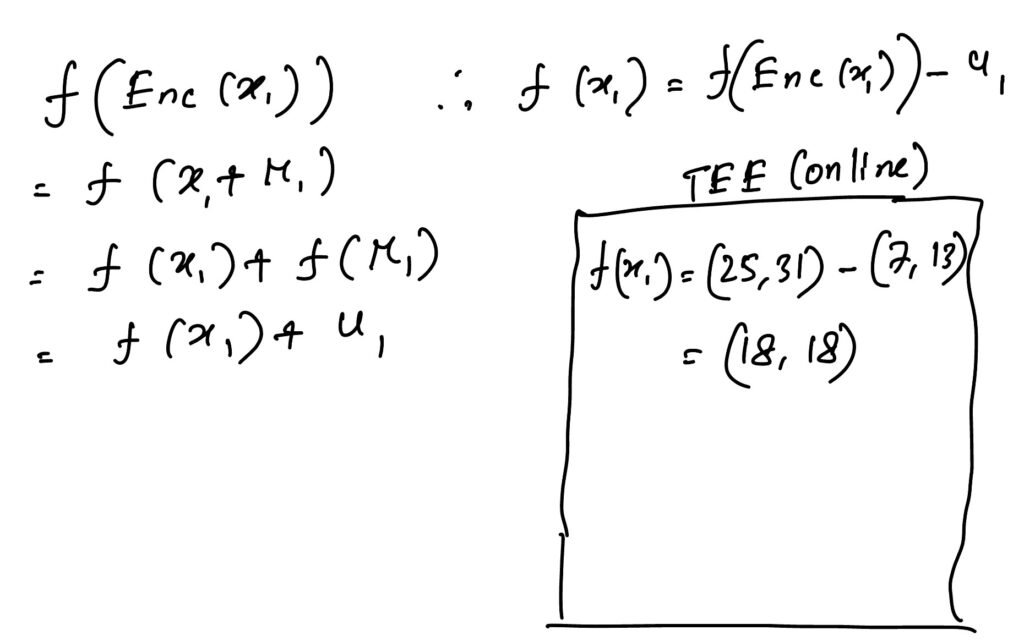

To facilitate these tasks, TEE has to go through some techniques. At first, In the offline stage (when there are not many requests from clients), the TEE generates a stream of pseudorandom elements r and precomputes f(r).

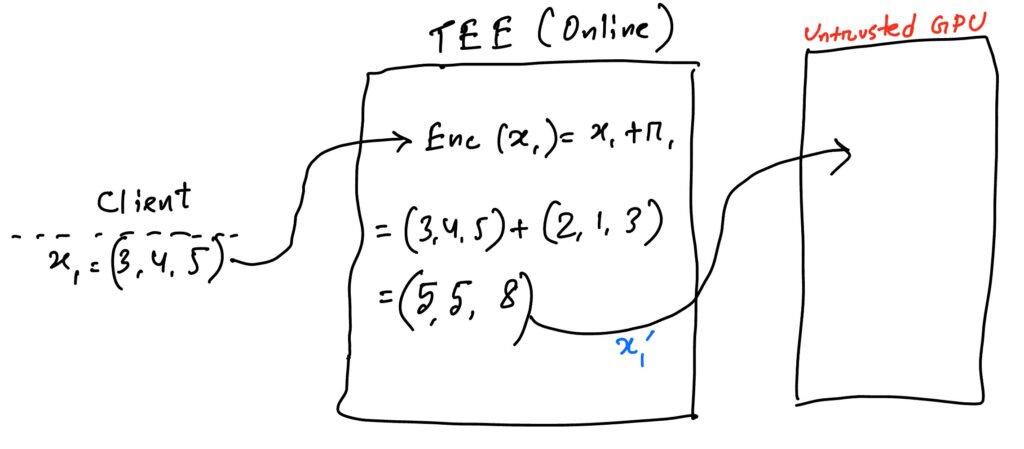

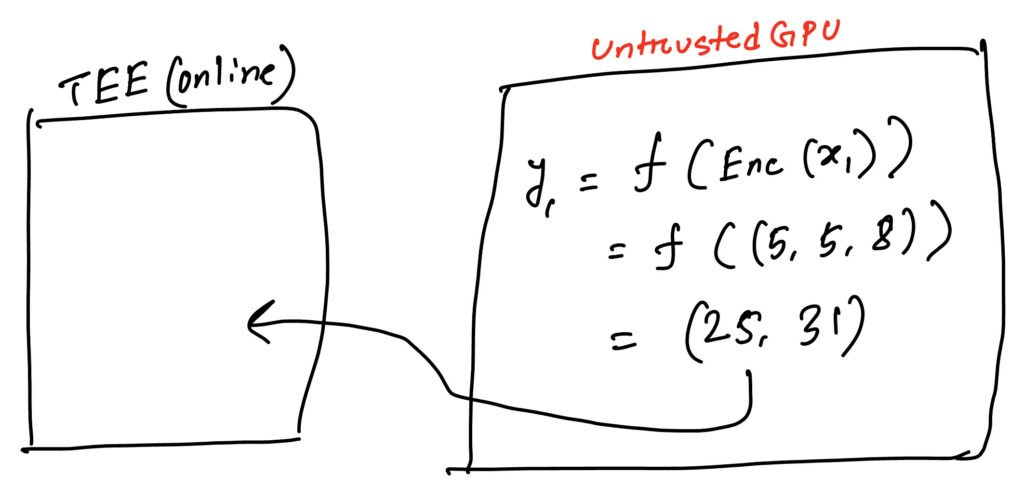

Then in the online phase, when the client sends an input, x1=(3,4,5). The TEE then performs encryption of x1 by Enc(x1). The encrypted x1 is then outsourced to untrusted GPU as x1‘.

The untrusted GPU receives the Enc(x1), calculates the f(Enc(x1)) and sends back the already encrypted result y1‘ to the TEE.

Then the process involves decrypting y1 from y1‘. Keep in mind y1‘ = f(Enc(x1)).

After that, the integrity check comes into place to make sure whether y1 is generated from the multiplication of x1 and W. This is done using the Freivald’s algorithm. A possible time complexity of this method is O(n2.8874) using Stression’s matrix multiplication.

After that, the TEE computes the non-linear operation, for example, sigmoid(y1) to generate input for next layer in a deep neural network. For the next layer, the process follows the same steps.

NB: Crypto protocols are efficient in outsourcing linear functions. However, in outsourcing non-linear functions, the overhead become impractical. That’s why, non-linear functions are not outsourced in this paper.