Authors: Yicheng Zhang, Carter Slocum, Jiasi Chen and Nael Abu-Ghazaleh

Introduction

With the rise of Augmented Reality (AR) and Virtual Reality (VR) systems, concerns about security and privacy have become more prominent for both researchers and industry professionals. This study reveals that AR/VR systems can be susceptible to attacks from malicious software. Such software, even without special permissions, can gather private information about user activities, interactions with other applications, or details about the surrounding environment.

The researchers developed several types of attacks to demonstrate these vulnerabilities. They showed that a malicious application could detect and record hand gestures, voice commands, and keystrokes on a virtual keyboard with more than 90% accuracy. They also demonstrated that an attacker could identify which application a user is opening and estimate the distance to a bystander in the real world to within 10.3 cm.

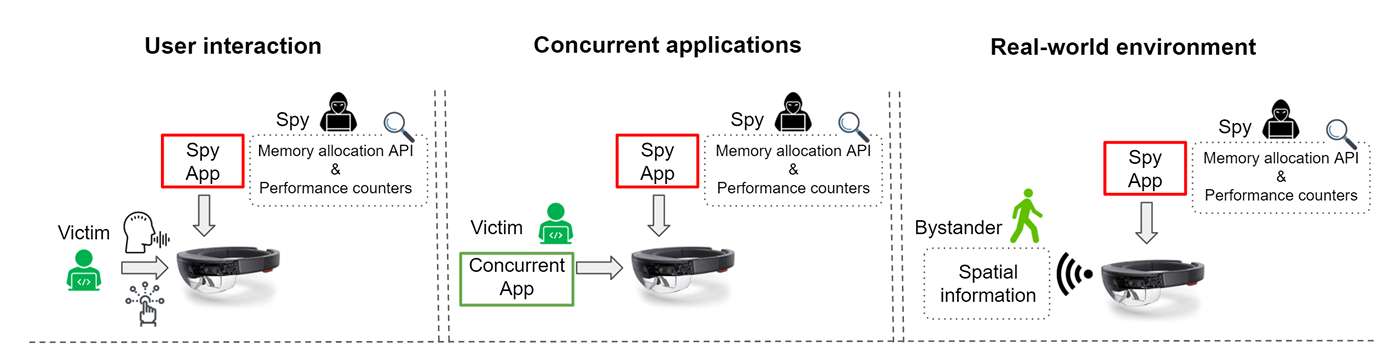

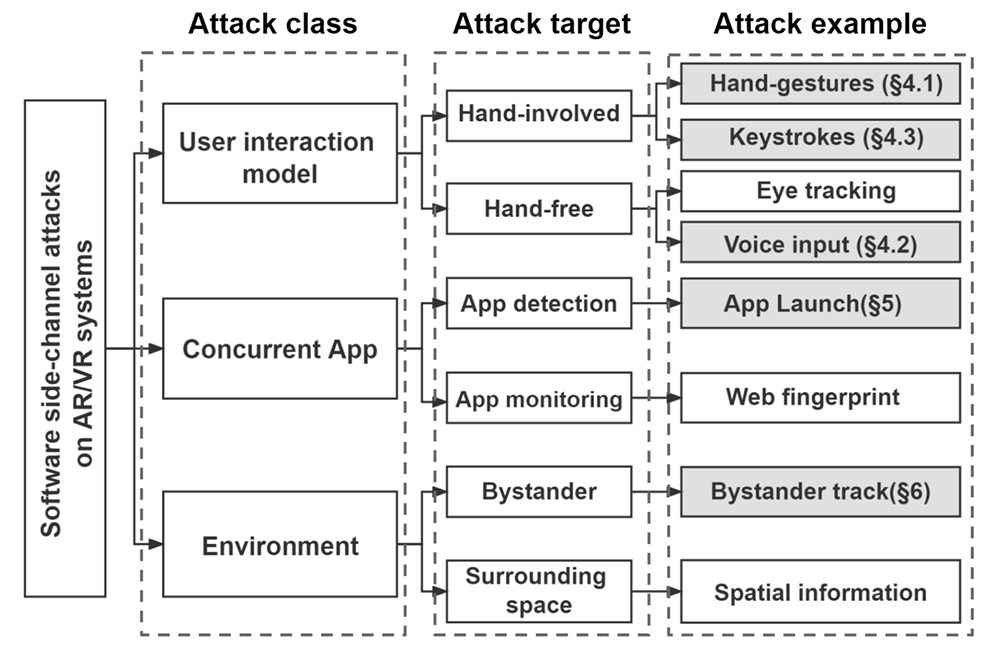

In this work, the authors demonstrate five specific attacks from Fig. 1 (shaded boxes): hand gestures, voice input, keystrokes, app launch, and bystander ranging. These five attacks occur under three scenarios, shown in Fig. 2.

Figure 1: Taxonomy of software side-channel attacks on AR devices. The shaded attack examples are the ones demonstrated in the paper.

Figure 2: The three attack scenarios considered in this work.

Indexing of the attacks

- Attack 1: Hand gestures inference

- Attack 2: Voice commands inference

- Attack 3: Keystroke monitoring

- Attack 4: Concurrent app fingerprinting

- Attack 5: Bystander ranging

Experimental Setup

The authors demonstrate the attacks on two devices:

- Microsoft Hololens 2 (a Windows-based AR headset)

- Meta Quest 2 (an Android-based VR headset)

The malicious spy applications were built with two game engines:

- Unity version 2020.3.16f1 (Attacks: 1, 2, 5)

- Unreal Engine version 4.27.2 (Attacks: 3, 4)

Attack 1: Hand gestures inference

Users can interact with digital artifacts (e.g., holograms) in the environment either directly or with hand gestures. We first describe hand gesture inputs on the Hololens 2 and Meta Quest 2, and then our attacks and evaluations.

Hand Gestures on Hololens 2:

- Touch: Directly touching holographic contents with the index finger, indicated by a white touch cursor.

- Air Tap: Interacting with distant holograms via a hand ray by pinching and releasing thumb and index finger.

- Start Menu Gesture: Opens the start menu by pointing at the inner wrist with the palm facing outward.

- Scale Up: Resizing holograms by grabbing and stretching their corners.

- Scale Down: Similar to Scale Up, used for shrinking holograms.

Hand Gestures on Meta Quest 2:

- Palm Pinch: Holding thumb and index finger together to trigger the start menu.

- Point and Pinch: Similar to Air Tap on Hololens, for selecting distant holograms.

- Touch: Same as the Touch gesture on Hololens 2.

- Scale Up: Identical to the Scale Up gesture on Hololens 2.

- Scale Down: Identical to the Scale Down gesture on Hololens 2.

The Technique

Imagine using a virtual reality headset to interact with holograms. When you touch a hologram with your finger, the device’s graphics system gets busy processing that action. This activity can be measured by something called “Vertex count,” which tells us how many points are being processed to create the image.

Now, think of a spy program running quietly in the background. This program can observe the Vertex count. For example, when you perform a “Touch” gesture, the Vertex count might jump from 1000 to a higher number because the device needs to render your finger and the hologram interaction.

Different gestures, like “Air Tap” or opening the start menu, will create different jumps in the Vertex count. By watching these changes, the spy program can figure out which gestures you’re making, even though it’s not supposed to have this information.

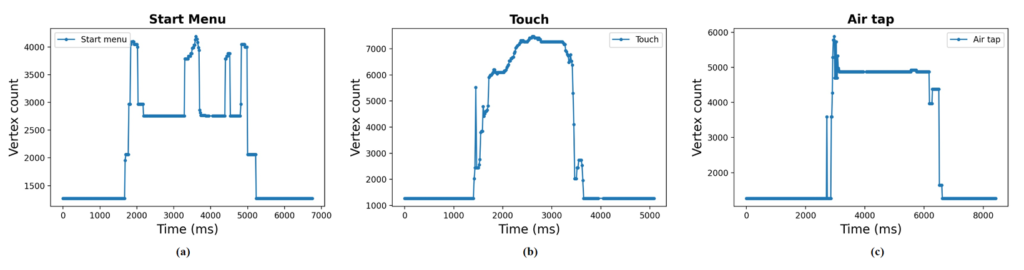

Rendering Performance Counter Observations:

- Start Menu, Touch, and Air Tap Gestures:

  - Vertex count is stable around 1000 before gestures are performed.

  - During hand gestures, the Vertex count increases as the device renders hands in the immersive environment.

- Start Menu Gesture: Generates fewer vertices compared to Touch and Air Tap.

- Touch Gesture: Generates more vertices than Air Tap due to direct interaction with 3D objects.

Figure 3: Performance counter traces for hand gestures on Hololens 2: (a) Start menu; (b) Touch; and (c) Air tap.
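The observations above can be sketched as a simple rule on the Vertex count trace. This is a minimal illustration, not the authors' classifier: the function name `classify_gesture` and all three thresholds are hypothetical, and only the ordering (Start Menu below Air Tap below Touch) and the idle baseline of about 1000 come from the text.

```python
from statistics import mean

# Hypothetical thresholds on the peak rise above the idle baseline.
# The text only gives the ordering: Start Menu < Air Tap < Touch.
NOISE_FLOOR = 100
AIR_TAP_MIN_RISE = 400
TOUCH_MIN_RISE = 900


def classify_gesture(trace, baseline_samples=5):
    """Label a Vertex count trace by how far it rises above its idle baseline."""
    baseline = mean(trace[:baseline_samples])
    rise = max(trace) - baseline
    if rise < NOISE_FLOOR:
        return "none"
    if rise < AIR_TAP_MIN_RISE:
        return "start_menu"
    if rise < TOUCH_MIN_RISE:
        return "air_tap"
    return "touch"


# Made-up traces: idle around 1000, then a burst while the hand is rendered.
idle = [1000] * 5
print(classify_gesture(idle + [1250, 1300, 1250] + idle))
print(classify_gesture(idle + [1600, 1700, 1600] + idle))
print(classify_gesture(idle + [2100, 2300, 2100] + idle))
```

A real attack would need thresholds (or a trained model) calibrated on traces recorded from the target device, since the absolute counts depend on the scene being rendered.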

Attack 2: Voice commands inference

Let’s first briefly describe the built-in voice commands on both the Quest and Hololens headsets.

Built-in Voice Input Commands on Hololens 2:

- “Go to Start”

- “Take a picture”

- “Start/stop recording”

- “Turn up/down the volume”

- “Show/hide hand ray”

Built-in Voice Input Commands on Meta Quest 2:

On the Quest 2, the user initiates a voice command by double-clicking the home button on the right controller. The built-in commands are:

- “Take a photo”

- “Start/stop recording”

- “Turn up/down the volume”

- “Reset view”

- “Start casting”

The Technique

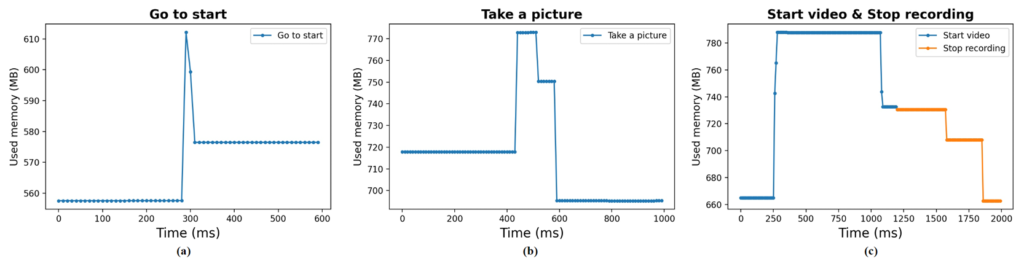

Different voice commands cause the spy program to use different amounts of memory. For example, when you say “Go to Start” or “Take a picture” on your AR/VR device, the spy program’s memory usage suddenly increases (Fig. 4). This spike shows that the command was triggered. We think this happens because the command shares a memory space with the spy program. The memory used varies by command: “Start/stop recording” uses the most (780 MB), while “Go to Start” uses less (610 MB).

So, by watching these memory changes, the spy program can tell which voice command you used.

Figure 4: Performance counter traces as a user speaks different voice commands: (a) Go to start; (b) Take a picture; and (c) Start video and Stop recording.
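One way to picture this inference is a nearest-peak lookup over the spy program's own memory trace. The sketch below is an assumption-laden illustration: the peak values for "Go to Start" (610 MB) and "Start/stop recording" (780 MB) come from the text, while the function name `infer_command`, the 50 MB spike threshold, and the 500 MB idle level in the example are hypothetical.

```python
# Peak memory usage in MB per command, as reported in the text above.
KNOWN_PEAKS_MB = {"Go to Start": 610, "Start/stop recording": 780}


def infer_command(memory_trace_mb, min_spike_mb=50):
    """Return the command whose known peak is closest to the observed peak.

    Returns None when the trace shows no spike above the idle level.
    min_spike_mb is a hypothetical noise threshold.
    """
    idle = min(memory_trace_mb)
    peak = max(memory_trace_mb)
    if peak - idle < min_spike_mb:
        return None
    return min(KNOWN_PEAKS_MB, key=lambda cmd: abs(KNOWN_PEAKS_MB[cmd] - peak))


# Made-up traces with a hypothetical idle level of about 500 MB.
print(infer_command([500, 502, 605, 612, 501]))
print(infer_command([500, 640, 775, 500]))
print(infer_command([500, 505, 503]))
```

Commands whose peaks sit close together would be hard to separate with a single number, which is one reason a classifier over the whole trace is a more plausible design than this lookup.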

Attack 3: Keystroke monitoring

When using a virtual keyboard in an AR/VR environment, each keystroke requires different hand and finger movements, creating unique patterns. A spy program can detect these patterns to figure out what keys are being pressed. For example, as a user types sensitive information like credit card numbers, a malicious app running in the background can monitor performance data and identify each keystroke based on these unique patterns. This approach doesn’t need access to WiFi signals or physical infrastructure, making it a stealthy and effective method of capturing sensitive information.

The Technique

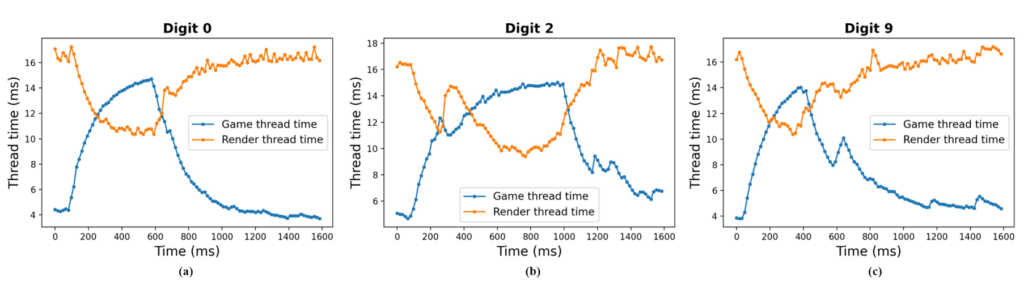

When using AR/VR devices, different hand movements (like touching different digits) create unique patterns in how the device’s internal processes work. For example, touching the digits 0, 2, and 9 each results in different behaviors in the device’s performance (Fig. 5).

There are two main tasks the device handles: the “game thread,” which manages most of the app’s logic, and the “render thread,” which handles drawing the visuals.

Imagine that the device is tracking your hand movement. When you touch a digit, the “game thread” spends more time processing this action, so its activity level goes up. At the same time, the “render thread” spends less time updating visuals, so its activity level goes down.

This means that when you perform a touch gesture, the device shifts some of its resources from drawing visuals to tracking your hand, causing the game thread time to increase and the render thread time to decrease. By observing these changes, someone could infer what digit you touched.

Figure 5: Performance counter traces when a user inputs different digits on a virtual keyboard: (a) 0, (b) 2, and (c) 9.
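The opposite movement of the two timings can be turned into a simple touch detector. This is a sketch under stated assumptions: `touch_frames`, the 0.5 ms margin, and the sample timings are all hypothetical, and it only flags when a touch happens. Telling digits apart, as the paper does, would additionally require comparing the shape of each flagged segment against per-digit patterns.

```python
def touch_frames(game_ms, render_ms, baseline_frames=3, margin_ms=0.5):
    """Return indices of frames where game thread time rises above its
    baseline while render thread time falls below its baseline."""
    base_game = sum(game_ms[:baseline_frames]) / baseline_frames
    base_render = sum(render_ms[:baseline_frames]) / baseline_frames
    return [
        i
        for i, (g, r) in enumerate(zip(game_ms, render_ms))
        if g > base_game + margin_ms and r < base_render - margin_ms
    ]


# Made-up per-frame timings in milliseconds; frames 3 and 4 contain a touch.
game = [4.0, 4.0, 4.0, 6.5, 6.8, 4.1]
render = [8.0, 8.0, 8.0, 6.0, 5.9, 7.9]
print(touch_frames(game, render))
```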

Attack 4: Concurrent app fingerprinting

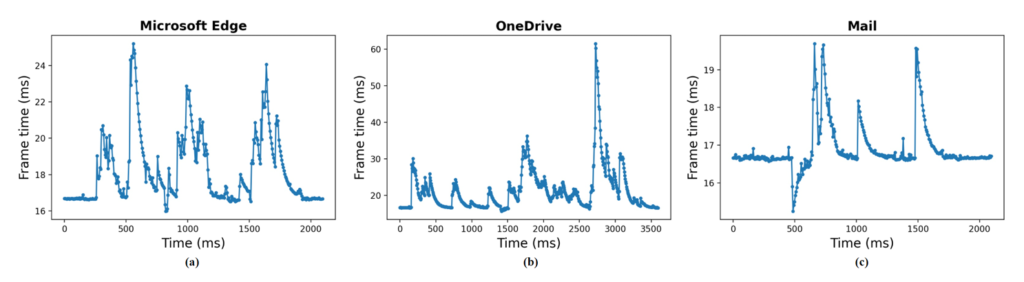

In this scenario, an attacker uses side-channel information to figure out which other apps are running on a device. This attack, called “Concurrent App Fingerprinting,” allows the attacker to identify apps being opened, which can reveal user activity and potentially help with other attacks like phishing.

For example, on the Hololens, different apps create unique patterns when they start up. A spy program running in the background can detect these patterns and identify the apps. However, this attack doesn’t work on the Quest 2 headset because it restricts most apps from running simultaneously, though this could change in the future.

The Technique

When a user launches an app on a device, a system process called backgroundTaskHost manages the start-up. This takes a few seconds, during which the app competes for system resources with any background spy program. This competition causes noticeable changes in the spy program’s performance, creating a unique “signature” for each app.

For example, as the user opens different apps, the spy program’s CPU/GPU frame latency increases differently for each one. By observing these changes, the spy program can identify which app is being launched. Fig. 6 illustrates this by showing distinct performance patterns for three different apps during their launch. Each app creates a unique pattern because they have different graphics content to render, even though they are displayed in the same-sized windows.

Figure 6: Performance counter traces when launching applications: (a) Microsoft Edge; (b) OneDrive; and (c) Mail.
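Matching an observed launch trace against stored signatures can be sketched as a nearest-template search. This is an illustrative stand-in, not the authors' method: the function name `nearest_app`, the use of Euclidean distance, and every latency value below are assumptions made for the example.

```python
import math


def nearest_app(trace, templates):
    """Return the app whose stored latency signature is closest
    (Euclidean distance) to the observed trace."""
    return min(templates, key=lambda app: math.dist(trace, templates[app]))


# Hypothetical frame-latency signatures (ms) recorded during app launch.
templates = {
    "Microsoft Edge": [16, 30, 45, 28, 16],
    "OneDrive": [16, 22, 24, 20, 16],
    "Mail": [16, 35, 20, 35, 16],
}

observed = [16, 29, 43, 30, 17]
print(nearest_app(observed, templates))
```

The sketch assumes traces are already aligned to the start of the launch and have equal length; real traces would need alignment and resampling first.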

Attack 5: Bystander ranging

An attacker can use an AR device to gather information about the environment it sees. For example, by analyzing processing patterns, the attacker can estimate the distance of a person walking by the device. This turns the AR device into a surveillance tool, raising privacy concerns for people nearby.

Although the raw spatial data from the device is usually encrypted and inaccessible, side-channel information from performance counters can still reveal details. This means an attacker can figure out how far a bystander is from the AR device by looking at the changes in the device’s performance during different activities. This type of attack, called “bystander ranging,” highlights potential privacy risks for people near AR/VR users.

The Technique

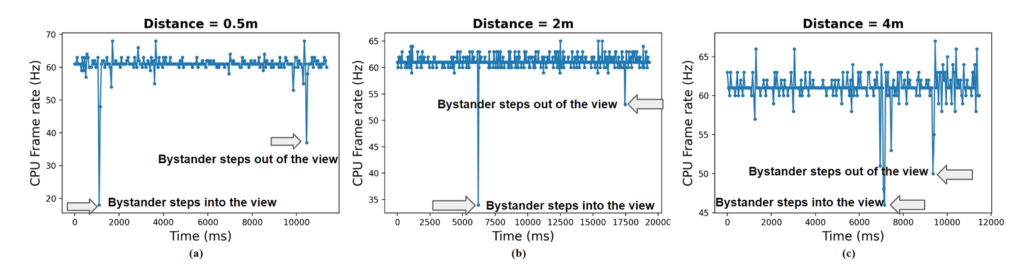

In this attack, a hidden spy program on a Hololens 2 monitors performance counters to detect when a bystander steps into the device’s view. The program uses a toolkit that forces the Hololens to create detailed spatial maps of the environment, which happens without the user’s explicit permission because it’s a normal function of the device.

Example:

- Bystander Detection: When a person steps into the Hololens’ view, the device processes their presence by creating a mesh, causing the CPU frame rate to drop from 60 to 18. This drop indicates someone is in view.

- Bystander Leaving: When the person steps out of view, the mesh is removed, and the CPU frame rate drops again from 60 to 37, signaling the person has left.

The spy program can track these frame rate changes to monitor when someone enters and exits the field of view.

Distance Impact:

The program also checks how close the person is. Closer objects cause a larger drop in the CPU frame rate. For instance, at 0.5 meters, the frame rate drops to 18, but at 2 meters, it only drops to 35. This helps the spy estimate how far away the person is.

Accuracy:

The attack uses machine learning to predict distances, achieving an accuracy within 10.3 cm. However, this is a proof-of-concept and works best in known environments; more testing is needed for different settings and with headset movement.

Figure 7: Side-channel leakage pattern for a bystander moving into view at different distances: (a) 0.5 m; (b) 2 m; (c) 4 m. The closer the bystander, the more the CPU frame rate drops.
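The relationship between frame-rate drop and distance can be illustrated with a two-point interpolation. This is a deliberately simplified stand-in for the machine-learning model the authors use: only the two calibration points (18 at 0.5 m, 35 at 2 m) and the idle rate of 60 come from the text, while the function names, the linear assumption, and the 10-frame detection margin are hypothetical.

```python
# Calibration points from the text: (minimum CPU frame rate, distance in metres).
CALIBRATION = [(18.0, 0.5), (35.0, 2.0)]
IDLE_FRAME_RATE = 60.0


def bystander_detected(frame_rates, drop_margin=10.0):
    """Flag a bystander when the frame rate falls well below the idle rate.
    drop_margin is a hypothetical noise threshold."""
    return min(frame_rates) < IDLE_FRAME_RATE - drop_margin


def estimate_distance_m(frame_rates):
    """Linearly interpolate distance from the lowest observed frame rate,
    clamped to the calibrated range."""
    (f0, d0), (f1, d1) = CALIBRATION
    lowest = max(f0, min(f1, min(frame_rates)))
    return d0 + (lowest - f0) * (d1 - d0) / (f1 - f0)


trace = [60, 60, 41, 26.5, 33, 60]
if bystander_detected(trace):
    print(f"bystander at roughly {estimate_distance_m(trace):.2f} m")
```

Linear interpolation between two points cannot reach the reported 10.3 cm accuracy and says nothing about distances beyond 2 m; it only shows why a larger drop maps to a shorter distance.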

Potential Mitigations

To protect AR/VR systems from side-channel attacks, two main strategies can be used: managing access to performance counters and detecting suspicious activity.

1. Managing Access to Performance Counters:

- Access Control: Only the current active app should access performance counters, but it’s hard to determine which app is active since multiple apps might be visible in AR/VR environments.

- Reduced Precision: Lowering the precision or rate of performance counters can make spying harder but might affect legitimate apps’ functionality.

- Permissions System: Users could set permissions for apps accessing performance counters, similar to how they manage permissions for other sensor data. However, this approach might confuse users and be hard to implement effectively.
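The reduced-precision idea can be sketched by rounding counter readings to a coarse step before an app sees them. The helper name `coarsen`, the 500-vertex step, and the sample readings are hypothetical choices for illustration, not a recommendation from the paper.

```python
def coarsen(value, step):
    """Round a counter reading down to the nearest multiple of step."""
    return (value // step) * step


# Made-up Vertex count readings: idle, then three gestures of increasing size.
raw = [1000, 1300, 1700, 2300]
print([coarsen(v, 500) for v in raw])
```

With a step of 500, the smallest burst becomes indistinguishable from the idle reading, while the larger ones remain visible. This shows the trade-off noted above: hiding every gesture needs a step coarse enough that legitimate profiling also loses detail.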

2. Detecting Suspicious Activity:

- Monitoring Resource Contention: Detecting unusual usage patterns or repeated access to performance counters can indicate an attack. Tools could be used to monitor and flag these behaviors, triggering defenses to disrupt the spy’s activity. However, this method might lead to false alarms and additional overhead.
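Flagging repeated counter access can be sketched as a sliding-window rate check per app. The class `PollingMonitor`, its parameters, and the app name are hypothetical; the paper does not specify a detection mechanism at this level of detail.

```python
from collections import deque


class PollingMonitor:
    """Flag apps that read performance counters too often in a time window."""

    def __init__(self, max_reads, window_s):
        self.max_reads = max_reads
        self.window_s = window_s
        self._reads = {}  # app name -> deque of recent read timestamps

    def record_read(self, app, timestamp_s):
        """Record one counter read; return True if the app exceeds the limit."""
        reads = self._reads.setdefault(app, deque())
        reads.append(timestamp_s)
        # Drop reads that have fallen out of the sliding window.
        while reads and reads[0] <= timestamp_s - self.window_s:
            reads.popleft()
        return len(reads) > self.max_reads


monitor = PollingMonitor(max_reads=3, window_s=1.0)
for t in [0.0, 0.1, 0.2, 0.3]:
    flagged = monitor.record_read("spy_app", t)
print(flagged)
```

A fixed limit like this illustrates the false-alarm problem mentioned above: a legitimate profiler polling at the same rate would be flagged just like a spy.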

By implementing these strategies, AR/VR systems can better protect user privacy and prevent malicious applications from exploiting side-channel information.

If you would like to learn more about this paper, please find it here.

Thank you